It’s been a while since I’ve posted on AI — between a new job and launching our Treasure Hunt, I’ve been quite busy the past couple months. (Go check out the treasure hunt, it launches soon and should be great fun!)

I certainly haven’t stopped using my AI tools, though, and I’m very excited about the latest updates to ChatGPT. I’ve recently built a multitask ChatGPT agent, which I’ll blog about soon, and I’m about to explore empowering that agent even more with connections via an API tool.

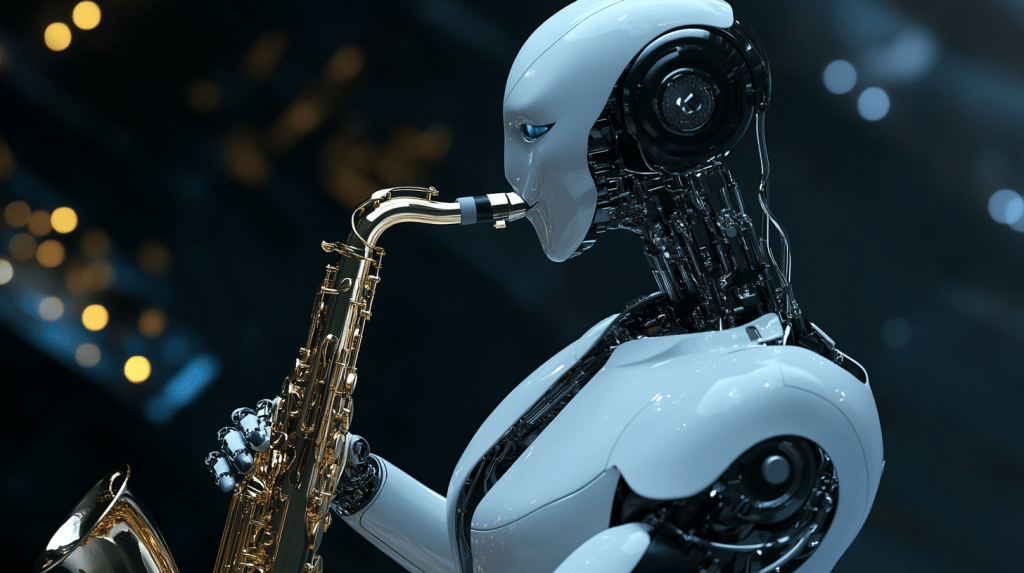

Today’s topic is still on music, though. In one of my favorite songs, “I.G.Y.” by Donald Fagen, there’s a very distinctive instrument playing a bridge and some fills. I thought it might be a saxophone with some weird mute, but since I know firsthand that mutes don’t work well at all on a sax, I’m mystified.

ChatGPT wasn’t, though. It very quickly identified the sound as most likely Fagen playing a synthesizer.

Okay, mystery solved. I think. Because now I’m wondering, how does ChatGPT know that? Did it analyze the track acoustically, or did it find references online?

According to ChatGPT, it’s the latter. “My response was derived from training data (interviews, liner notes, credible music analysis) and public information about Donald Fagen’s use of synthesizers on The Nightfly.”

Great, ChatGPT drew on sources that I couldn’t find with traditional search engines. However, when answering my question, ChatGPT added that the solo “is frequently mistaken for a sax.”

Naturally, I asked how ChatGPT arrived at that conclusion, since it seems very objective. Its reply was interesting. It couldn’t find any direct quotes of people saying, “I thought that was a sax,” but it was able to reference numerous threads on Reddit and other sites where people discussed the synth work and referred to the sound as “sax like.”

And that takes me back to one of my favorite topics: how trustworthy is any AI answer? I’d classify this one as pretty good, but still a hunch, and it’s important that users recognize when they’re getting a hunch versus definitive fact. So, also coming soon…how do we develop a way to have our AI agents reflexively signal a useful confidence level with any particular response?
