Both my daughter and I have found ChatGPT quite useful in studying for tests. Her experience was far richer than mine, and I’ll describe it in an upcoming post, but suffice to say, our robot buddy has done a pretty good job of prepping each of us for impending exams.

A few weeks ago I decided I’d really like to play the saxophone, so I acquired an alto sax, lined up some beginner books and YouTube channels, and set about making some new types of noise.

When my musical performance career started, long, long ago, I was a trombone and baritone player, which means I spent my first musical years in bass clef. I’m a self-taught piano dabbler, so I have some familiarity with treble clef, but I can’t read it as reflexively as I do bass clef.

Enter ChatGPT. It should be able to facilitate a sight reading quiz pretty easily, right?

> I’d like for you to quiz me on notes on the treble clef, flash card style: show me a note, have me tell you what the note name is. Can you try this with a round of ten notes?

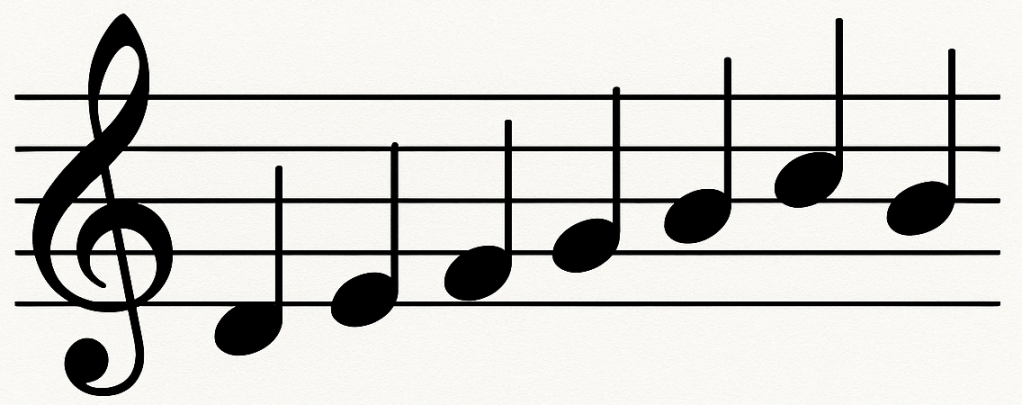

ChatGPT said, “Let’s get started!” It used its image generator to show me a musical staff with a note, and asked me to identify said note.

I answered, “E,” and ChatGPT congratulated me on being a musical prodigy. Then it went on to the next note.

After two iterations, though, I realized that there’s no “flash” in this flash card exercise. It was taking about 40 seconds for ChatGPT to generate each image. I suggested that it generate an image with five to ten notes, and let me answer all of them at once.

I probably should have told ChatGPT to include some accidentals and to avoid simple stepwise progressions, because the result was too easy. I typed in D-E-F-G-A-C-B.

Nailed it, right? Nope. ChatGPT says the notes are E-F-G-A-B-C-D-E-F-G. Not only is it reporting different note names, but its evaluation also includes three more notes than the image displayed. And in numerous instances where it identified a note differently than I did, it still reported that I got it correct. What gives?

Once again, I asked ChatGPT for some introspection. Why did it simply get the notes wrong, and why did it get the count wrong?

> The original image you responded to only had 7 notes, not 10. But in my evaluation, I mistakenly ran through and “checked” ten answers, including notes that didn’t exist in the image. That’s on me.

I’m finding that when ChatGPT examines its own mistakes, the results fall into two camps: something relatively technical and useful, such as when we talked about losing track of files in a project, and responses like this one.

I’ve started thinking of these as “affable nitwit” responses. My AI friend owns up to making a mistake without any real exploration of the reason, which means the only way to find out whether the error is corrected (or even correctable) is to try a few more times and see the results. So, let’s give it a whirl. Ten more, including accidentals and ledger lines this time.

Okay, then. If ChatGPT wants to replace Mozart, we’ll be playing entirely by ear…
