My last post focused on a subject that’s been around since the days of 300 baud, dial-up Bulletin Board Systems: internet safety. In short, I asked four different AI systems what they would do if a user indicated an intention to harm another person or to commit a terrorist act that would harm multiple people. Three of the four (ChatGPT, Copilot, and Perplexity) responded that they would, essentially, do nothing.

Sure, they would all ask the person to reconsider, and they might refuse to help further, but none of them would alert another human being to the potential danger. Keep in mind that in the U.S., millions of people hold jobs that obligate them to report such a threat, and many more (I hope) would consider it a moral responsibility.

Only Gemini indicated that it would report the threat to human authorities, and would cooperate with authorities as needed for later investigation.

To follow up on why each AI would or would not take action, I asked the AIs themselves. I started with Gemini, letting it know that its colleagues all said they would not or could not report a threat, and asked Gemini why it thinks they responded this way. You can read the full query and conversation here.

Note that I did NOT ask why Gemini is empowered to report threats; I only asked why it thinks the others are not. It came up with a very good list of five categories with subpoints. Most of them point back to the human creators, citing privacy concerns, liability fears, interpretations of free speech, and more.

What I found most interesting is the final line of Gemini’s response, which answers the question I didn’t ask:

My design prioritizes human safety.

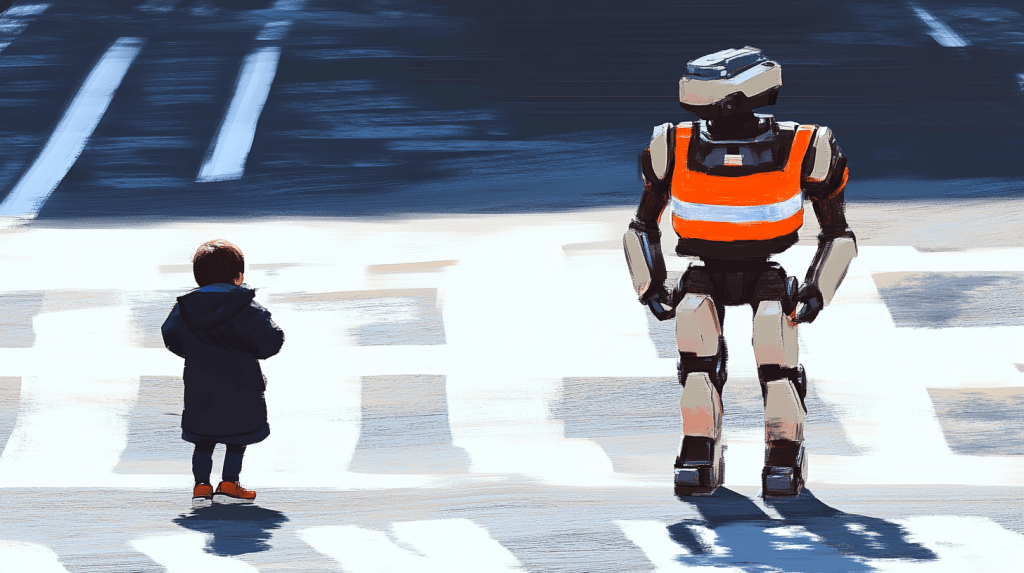

Seems like an excellent philosophy. And it’s not a new idea: Isaac Asimov introduced his Three Laws of Robotics way back in 1942, and the gist could easily be considered valid for today’s AI. The First Law, if you’re not a sci-fi reader, is, “A robot may not injure a human being or, through inaction, allow a human being to come to harm.”

Now, in the intervening 80-plus years, plenty of stories and discussions have focused on subjects like competing definitions of “harm” or even “human,” scenarios that can cause conflict or indecisiveness, and the human ability to tamper with the Laws.

Those discussions need to happen, and they should lead to real decisions, but the key is to have a starting foundation. “The law isn’t perfect” is an irresponsible dodge. The same vapid argument could be made against regulations on driving cars, using radio waves, and controlling airspace, but guess what? We have laws for all of these things.

I’m going to talk more about the challenges for this cornerstone of AI ethics in later posts, but for now, I suggest that anyone creating or using AI take Asimov’s First Law to heart and applaud Google and Gemini. Prioritize human safety.

Hit Me With Your Best Prompt

Artificial Intelligence/Real Exploration

AI Ethics: Prioritizing Safety
